Comparisons

LangChain

Separation of Prompts and Code

LangChain provides a PromptTemplate class, along with several variants.

```python
# Older releases also accept `from langchain import PromptTemplate`;
# recent releases define the class in langchain_core.prompts.
from langchain.prompts import PromptTemplate

template = """
I want you to act as a naming consultant for new companies.
What is a good name for a company that makes {product}?
"""

prompt = PromptTemplate(
    input_variables=["product"],  # placeholders the template expects
    template=template,
)

prompt.format(product="colorful socks")
# -> I want you to act as a naming consultant for new companies.
# -> What is a good name for a company that makes colorful socks?
```

The problem with this example is that the prompt is hard-coded as a string in the application code. If we devise a better prompt, we have to find it in the code, update it, and redeploy. This also makes it hard to divide maintenance of the AI application between roles, e.g., Prompt Designers and Developers.

Prompt Store serves prompts as an API, cleanly separating the roles of prompt management and application logic.

We can pair LangChain with Prompt Store to avoid hard-coding prompt strings.
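To make the separation concrete, here is a minimal sketch of application code that looks a prompt up by name instead of embedding it. The `PromptClient` class, the `/prompts/<name>` route, and the `{"template": ...}` response shape are hypothetical illustrations, not Prompt Store's actual API. A Prompt Designer can then change the template in the store without a redeploy, and the client fails fast if an edited template needs a variable the code does not supply.

```python
import json
import string
import urllib.request
from typing import Callable, Optional

# Hypothetical client: the /prompts/<name> route and the {"template": ...}
# response shape are assumptions for illustration, not Prompt Store's API.


def http_get(url: str) -> str:
    with urllib.request.urlopen(url) as resp:
        return resp.read().decode("utf-8")


class PromptClient:
    def __init__(self, base_url: str, fetch: Optional[Callable[[str], str]] = None):
        self.base_url = base_url.rstrip("/")
        self.fetch = fetch or http_get  # injectable for tests or caching

    def render(self, name: str, **variables: str) -> str:
        body = json.loads(self.fetch(f"{self.base_url}/prompts/{name}"))
        template = body["template"]
        # Fail fast if the (remotely edited) template needs a variable we lack.
        needed = {f for _, f, _, _ in string.Formatter().parse(template) if f}
        missing = needed - set(variables)
        if missing:
            raise KeyError(f"prompt {name!r} needs {sorted(missing)}")
        return template.format(**variables)


# Offline usage: a dict stands in for the remote prompt store.
fake_store = {
    "https://prompts.example/prompts/company-namer": json.dumps({
        "template": "What is a good name for a company that makes {product}?"
    })
}
client = PromptClient("https://prompts.example/", fetch=fake_store.__getitem__)
print(client.render("company-namer", product="colorful socks"))
```

The `fetch` parameter is a design choice for the sketch: it keeps the HTTP transport swappable, so the same code can run against a cache, a test double, or the real service.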

Fit of core abstractions to problem space

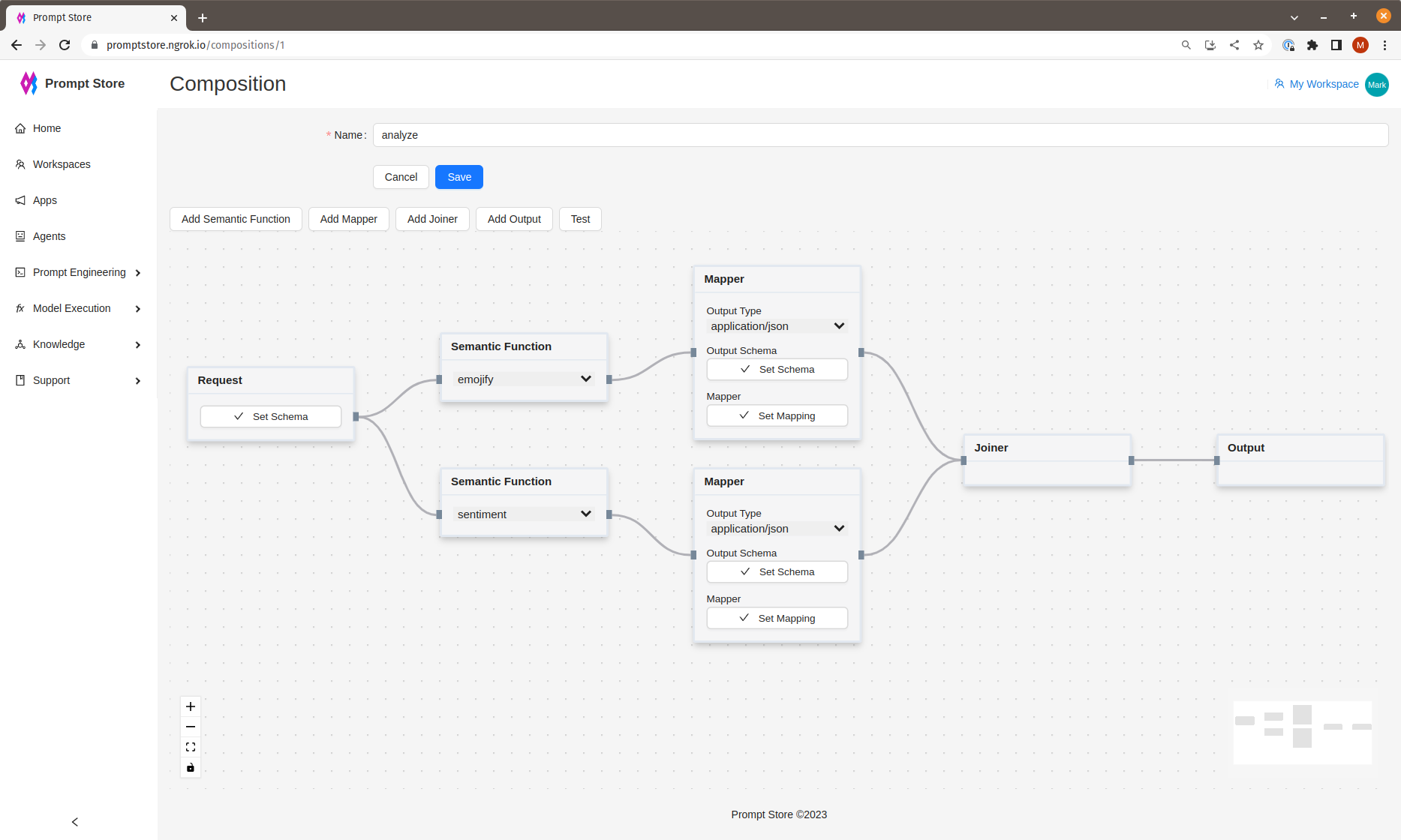

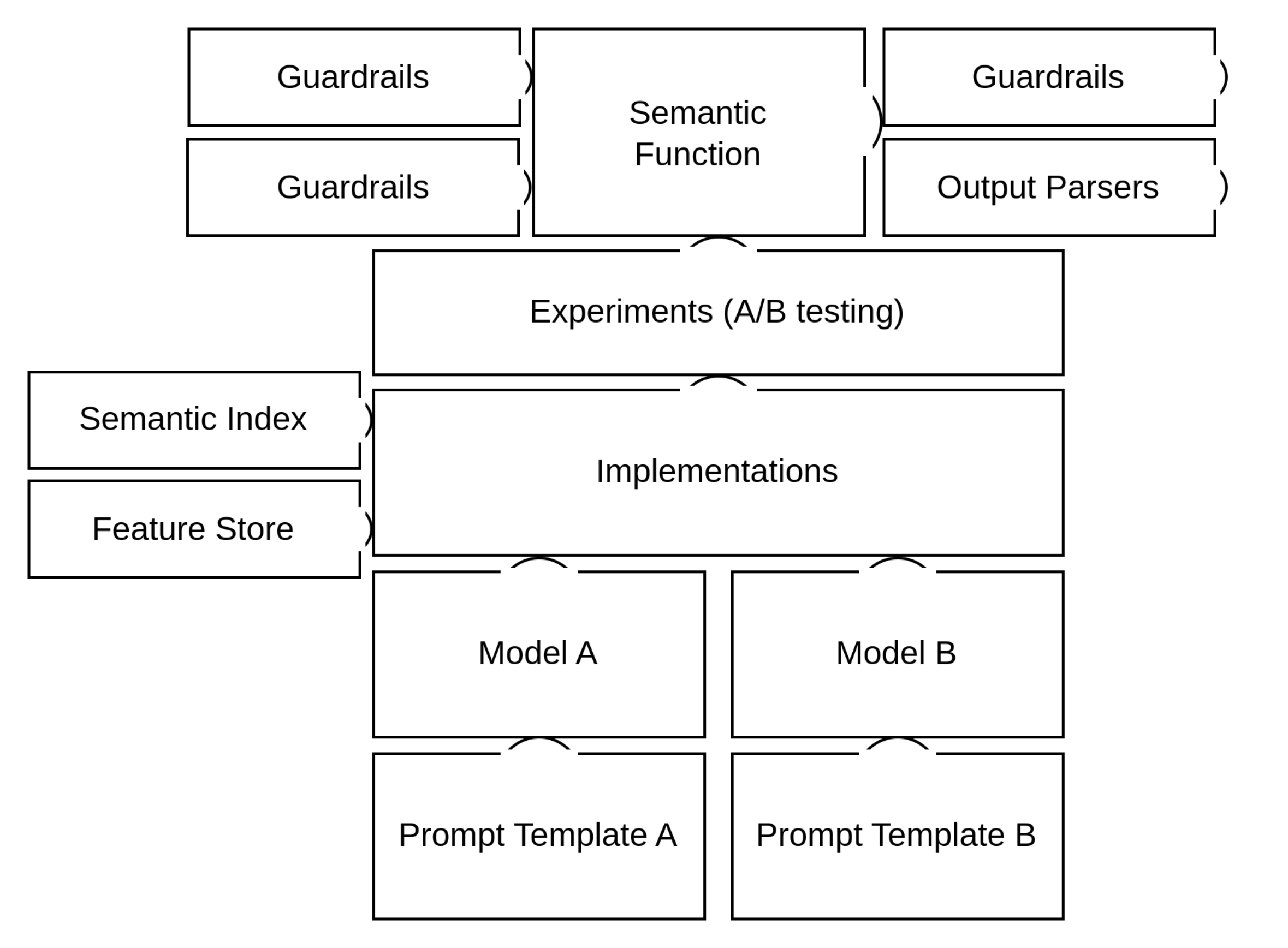

The Prompt Store Semantic Function is equivalent to a LangChain LLMChain. An LLMChain connects a Prompt to a Model.

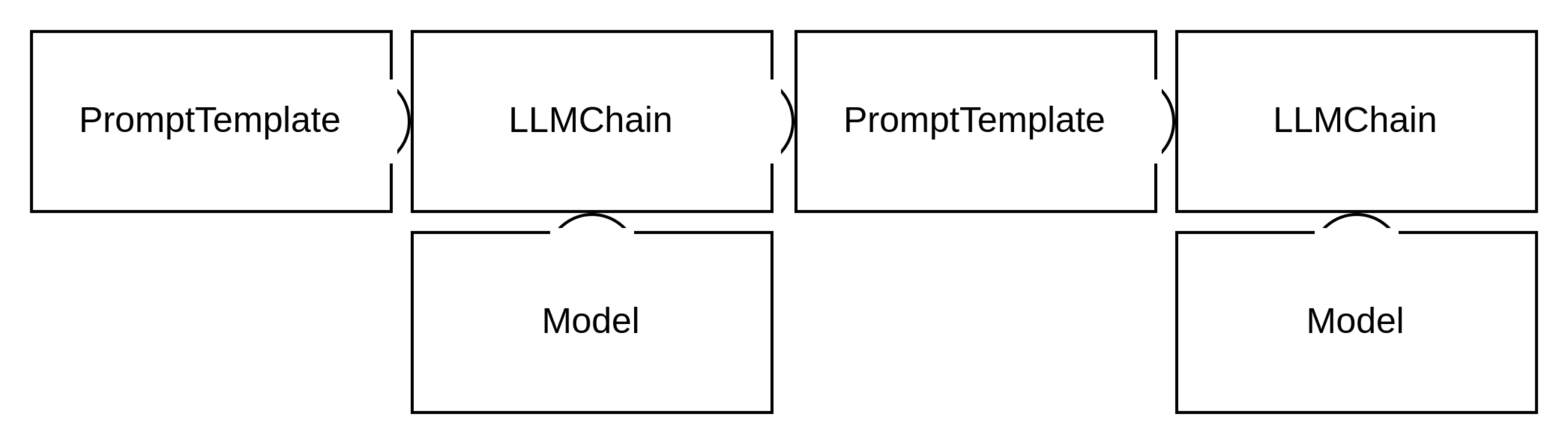

A Semantic Function also connects a Prompt to a Model. In addition, a Semantic Function can:

- Specify multiple implementations using different models and prompts, either to run experiments that find the best-performing combination or to switch to a different implementation to meet an operational requirement. For example, models trade off reasoning capability against cost, latency, or privacy.

- Connect one or more Semantic Indexes to inject recent or specialised knowledge into the prompt prior to calling the model.

- Apply guardrails, such as PII checking and content moderation, to model inputs or outputs.

- Connect to live data, such as personalisation details from an online feature store.
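The capabilities listed above can be sketched as a single callable that acts as the hub. Everything here is hypothetical: `SemanticFunction`, `Implementation`, the weighted experiment selection, the email-only `redact_pii` guardrail, and the stub model, index, and feature lookup are illustrative stand-ins, not Prompt Store's implementation. Real PII checking and content moderation would be far broader than one regex.

```python
import random
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Hypothetical types: plain callables stand in for models, semantic indexes,
# and an online feature store.
Model = Callable[[str], str]                      # prompt -> completion
Index = Callable[[str], List[str]]                # query -> relevant passages
FeatureLookup = Callable[[str], Dict[str, str]]   # user id -> live features


@dataclass
class Implementation:
    name: str
    template: str        # may use {context}, {features}, {query}, caller vars
    model: Model
    weight: float = 1.0  # share of traffic when running an experiment


EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def redact_pii(text: str) -> str:
    # Toy guardrail: masks email addresses only.
    return EMAIL.sub("[REDACTED]", text)


@dataclass
class SemanticFunction:
    implementations: List[Implementation]
    indexes: List[Index] = field(default_factory=list)
    feature_lookup: Optional[FeatureLookup] = None
    guardrails: List[Callable[[str], str]] = field(default_factory=lambda: [redact_pii])
    rng: random.Random = field(default_factory=random.Random)

    def choose(self, name: Optional[str] = None) -> Implementation:
        if name is not None:  # pin one, e.g. a local model for privacy
            for impl in self.implementations:
                if impl.name == name:
                    return impl
            raise KeyError(name)
        weights = [i.weight for i in self.implementations]
        return self.rng.choices(self.implementations, weights=weights)[0]

    def __call__(self, query: str, user_id: str = "",
                 impl: Optional[str] = None, **variables: str) -> str:
        chosen = self.choose(impl)
        # Inject knowledge retrieved from every connected index.
        context = "\n".join(p for index in self.indexes for p in index(query))
        features = self.feature_lookup(user_id) if self.feature_lookup else {}
        prompt = chosen.template.format(query=query, context=context,
                                        features=features, **variables)
        for check in self.guardrails:  # guard the model input
            prompt = check(prompt)
        output = chosen.model(prompt)
        for check in self.guardrails:  # guard the model output
            output = check(output)
        return output


def echo_model(prompt: str) -> str:  # stand-in for a real LLM call
    return f"MODEL SAW: {prompt}"


def docs_index(query: str) -> List[str]:  # stand-in semantic index
    return ["Socks ship in 2 days."]


def user_features(user_id: str) -> Dict[str, str]:  # stand-in feature store
    return {"tier": "gold"}


fn = SemanticFunction(
    implementations=[
        Implementation("small", "Context: {context}\nQ: {query}", echo_model, weight=0.9),
        Implementation("large", "Context: {context}\nUser: {features}\nQ: {query}",
                       echo_model, weight=0.1),
    ],
    indexes=[docs_index],
    feature_lookup=user_features,
)
print(fn("When do my socks arrive? Email me at a@b.com", user_id="u1", impl="large"))
```

Omitting `impl` lets the weighted choice split traffic between implementations for an experiment; passing it pins one implementation to meet an operational requirement.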

LangChain aims to provide a simple abstraction for chaining components together.

LangChain chains

In practice, however, the LLMChain's role looks more like a hub-and-spoke arrangement than a linear chain.

Semantic functions as units of execution

Prompt Store uses the Semantic Function as a hub that connects various components of the AI application together. Semantic Functions can still be chained together to create higher-order functions.
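Chaining can be sketched by treating each semantic function as a plain `str -> str` callable and composing them left to right; the result is itself a callable, so it can be chained again as a higher-order function. The `chain` helper and the `summarise` and `to_headline` stubs are hypothetical; real steps would call models rather than manipulate strings.

```python
from functools import reduce
from typing import Callable

# A semantic function is modelled here as a plain str -> str callable;
# the stubs below stand in for model-backed functions.
SemanticFn = Callable[[str], str]


def chain(*steps: SemanticFn) -> SemanticFn:
    """Compose semantic functions left to right into a higher-order function."""
    def run(text: str) -> str:
        return reduce(lambda acc, step: step(acc), steps, text)
    return run


def summarise(text: str) -> str:  # stub: keeps the first sentence only
    return text.split(".")[0] + "."


def to_headline(text: str) -> str:  # stub second step
    return text.upper()


summarise_as_headline = chain(summarise, to_headline)
print(summarise_as_headline("Prompt Store connects components. It also chains them."))
```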