Prompts

Definition of a Prompt

A Prompt is an instruction or example given to a Large Language Model (LLM) to elicit the expected response for a given input.

Prompt engineering differs from working with traditional Natural Language Processing (NLP) services, each of which is designed for a single task such as sentiment classification or named entity recognition. A single LLM, by contrast, can be prompted to perform a range of tasks, including content or code generation, summarization, expansion, conversation, creative writing, translation, and more.

A popular structure for prompts starts by telling the LLM what role it should play. The prompt then states what information will be provided, followed by what should be done with it, stated as explicitly as possible. Providing examples of inputs and their expected output also helps.
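The structure described above can be sketched as a small template builder. This is a minimal illustration, not a Prompt Store API: the function `build_prompt` and its parameters are hypothetical, and the section order (role, information, task, examples) simply follows the paragraph above.

```python
from __future__ import annotations


def build_prompt(role: str, context: str, task: str,
                 examples: list[tuple[str, str]] | None = None) -> str:
    """Assemble a prompt in the order: role, provided information, task, examples.

    build_prompt is a hypothetical helper for illustration only.
    """
    parts = [
        f"You are {role}.",
        f"You will be given the following information:\n{context}",
        f"Task: {task}",
    ]
    # Worked input/output pairs show the model the expected output format.
    for example_input, example_output in examples or []:
        parts.append(f"Example input: {example_input}\nExample output: {example_output}")
    # Separate the sections with blank lines.
    return "\n\n".join(parts)


prompt = build_prompt(
    role="a support agent for an online bookstore",
    context="Customer review: 'The book arrived two weeks late.'",
    task="Classify the sentiment of the review as positive, negative or neutral. "
         "Reply with one word.",
    examples=[("'Great service, fast delivery!'", "positive")],
)
print(prompt)
```

Keeping the structure in one function means every prompt in an application follows the same layout, which makes variants easier to compare.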

There is no prescribed formula, and techniques differ. It’s important to remember that LLMs are “next word prediction” machines: the more context a prompt provides, the better the model (ChatGPT, for example) can construct a “story” that extends or completes the user’s input.

Effective Prompts are critical to AI Apps

Prompt quality is the biggest driver of output quality from Large Language Models. Prompts need to be improved regularly as we observe the application’s performance or as new techniques become known. What works best for one model may not work as well for a different model or model version, so we need to measure which prompts produce the best outputs for our application.
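Measuring which prompt works best can start as simply as scoring each prompt variant against a small set of labelled test cases. The sketch below assumes exact-match accuracy as the metric and uses a hypothetical `fake_model` in place of a real LLM client so that it runs offline; `score_prompt` and the test cases are illustrative, not part of Prompt Store.

```python
from __future__ import annotations

from typing import Callable


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: it only answers tersely when told to use one word."""
    if "one word" in prompt:
        return "negative" if "late" in prompt else "positive"
    return "The sentiment of this review seems to be negative."


def score_prompt(template: str, cases: list[tuple[str, str]],
                 model: Callable[[str], str]) -> float:
    """Fraction of test cases where the model's output exactly matches the expected label."""
    hits = 0
    for review, expected in cases:
        output = model(template.format(review=review)).strip().lower()
        hits += output == expected  # True counts as 1, False as 0
    return hits / len(cases)


cases = [("Arrived late and damaged.", "negative"),
         ("Loved it, great read!", "positive")]
variants = {
    "v1": "What is the sentiment of this review? {review}",
    "v2": "Classify the sentiment of this review as positive or negative. "
          "Reply with one word.\nReview: {review}",
}
scores = {name: score_prompt(t, cases, fake_model) for name, t in variants.items()}
best = max(scores, key=scores.get)
print(scores, best)  # {'v1': 0.0, 'v2': 1.0} v2
```

The right metric depends on the task: exact match suits classification, while free-form generation usually needs a different evaluation. The same test cases can be rerun whenever the model or model version changes.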

Once a good prompt is found, we naturally want to share it within our team, so that other AI engineers can learn from it or adapt it to a related purpose.

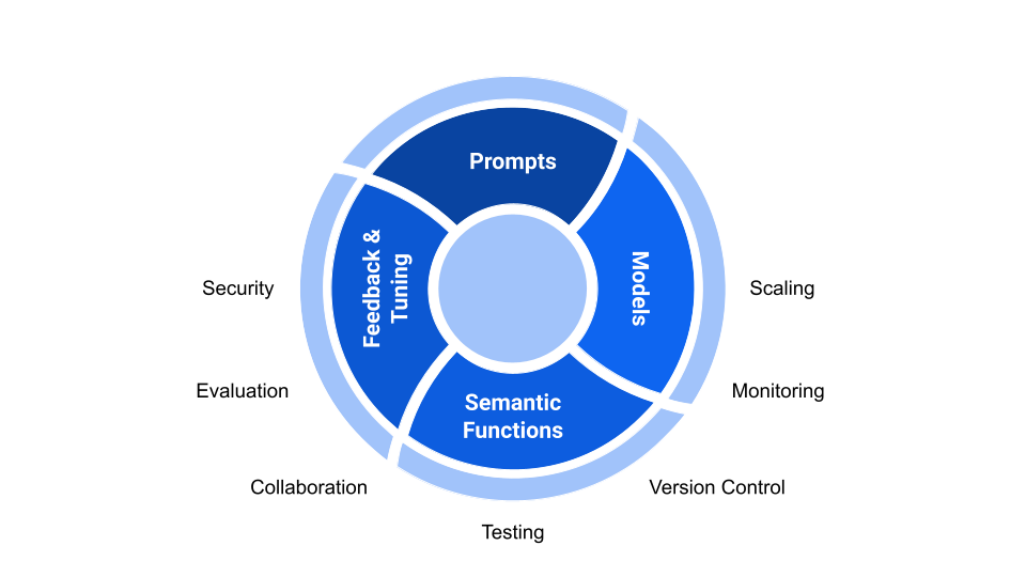

Prompt management is the core purpose of Prompt Store.
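At its simplest, prompt management means keeping every named prompt together with its earlier versions, so that a team can share, compare and roll back iterations. The in-memory `PromptRegistry` below is a hypothetical sketch of that idea only; it is not Prompt Store’s storage model or API, and a shared deployment would need persistent, access-controlled storage.

```python
from __future__ import annotations


class PromptRegistry:
    """Hypothetical in-memory prompt store: each save appends a new version."""

    def __init__(self) -> None:
        self._versions: dict[str, list[str]] = {}

    def save(self, name: str, template: str) -> int:
        """Store a new version of the named prompt and return its 1-based version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(template)
        return len(versions)

    def get(self, name: str, version: int | None = None) -> str:
        """Return the requested version, or the latest one if no version is given."""
        versions = self._versions[name]
        return versions[-1] if version is None else versions[version - 1]

    def history(self, name: str) -> list[str]:
        """All versions of a prompt, oldest first, so teammates can compare iterations."""
        return list(self._versions[name])


registry = PromptRegistry()
registry.save("review-sentiment", "What is the sentiment of this review? {review}")
latest = registry.save(
    "review-sentiment",
    "Classify the sentiment of this review as positive or negative. "
    "Reply with one word.\nReview: {review}",
)
print(latest)  # 2
```

Appending rather than overwriting keeps earlier wordings available, which matters when a prompt that worked for one model version needs to be revisited for another.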

Beyond the need to iterate, the next challenge is to feed prompts with dynamic information, such as just-in-time knowledge or personalisation variables. In a robust application these variables must be validated; otherwise the model suffers from “garbage in, garbage out”. Validation covers variables that were expected but are missing, as well as variables of the wrong data type.
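The validation step can be sketched as checking the supplied values against a declared schema of variable names and Python types before anything is sent to the model. The schema format and the `validate_variables` helper are assumptions made for illustration; a production system might use JSON Schema or a validation library instead.

```python
from __future__ import annotations

import string


def validate_variables(template: str, schema: dict[str, type], values: dict) -> list[str]:
    """Return a list of problems; an empty list means the values are safe to inject.

    validate_variables and the schema format are hypothetical, for illustration.
    """
    errors: list[str] = []
    # Every placeholder in the template should be declared in the schema.
    placeholders = {field for _, field, _, _ in string.Formatter().parse(template) if field}
    for name in sorted(placeholders - set(schema)):
        errors.append(f"undeclared placeholder: {name}")
    # Each declared variable must be present and of the expected type.
    for name, expected_type in schema.items():
        if name not in values:
            errors.append(f"missing variable: {name}")
        elif not isinstance(values[name], expected_type):
            actual = type(values[name]).__name__
            errors.append(f"{name}: expected {expected_type.__name__}, got {actual}")
    return errors


template = "Recommend a book for {name}, who is {age} years old."
schema = {"name": str, "age": int}
print(validate_variables(template, schema, {"name": "Ada", "age": 36}))    # []
print(validate_variables(template, schema, {"name": "Ada", "age": "36"}))  # ['age: expected int, got str']
print(validate_variables(template, schema, {"age": 36}))                   # ['missing variable: name']
```

Returning a list of problems, rather than raising on the first one, lets the caller report every bad variable at once.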

We call the component that injects live data into a prompt and calls the Large Language Model a Semantic Function.
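A semantic function, as defined above, can be sketched as an object that holds a prompt template and a model, fills the template with live data at call time, and returns the model’s completion. The `SemanticFunction` class and the offline `echo_model` stand-in are hypothetical; a real implementation would call an actual LLM client and would typically validate the variables first.

```python
from __future__ import annotations

from typing import Callable


class SemanticFunction:
    """Hypothetical sketch: fills a prompt template with live data, then calls an LLM."""

    def __init__(self, template: str, model: Callable[[str], str]) -> None:
        self.template = template
        self.model = model  # any callable mapping prompt text to completion text

    def __call__(self, **variables: str) -> str:
        # str.format raises KeyError if a template variable is not supplied.
        prompt = self.template.format(**variables)
        return self.model(prompt)


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM client so the sketch runs offline."""
    return f"[model saw] {prompt}"


greet = SemanticFunction("Write a one-line welcome for {name} from {city}.", echo_model)
print(greet(name="Ada", city="London"))
# [model saw] Write a one-line welcome for Ada from London.
```

Because the model is just a callable, the same function can be pointed at a different model or model version without changing its template.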

As with prompts, once a good function is defined, we want the ability to share it, or compose it into higher-level functions.
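Composition can be sketched by treating each semantic function as a plain callable and feeding one function’s output into the next as a named variable. The helpers `semantic_function` and `compose`, and the stand-in `fake_model`, are hypothetical and only illustrate the idea.

```python
from __future__ import annotations

from typing import Callable

Model = Callable[[str], str]


def semantic_function(template: str, model: Model) -> Callable[..., str]:
    """Return a function that fills `template` and calls `model` (hypothetical helper)."""
    def run(**variables: str) -> str:
        return model(template.format(**variables))
    return run


def compose(first: Callable[..., str], second: Callable[..., str], key: str) -> Callable[..., str]:
    """Feed the output of `first` into `second` as the variable named `key`."""
    def run(**variables: str) -> str:
        return second(**{key: first(**variables)})
    return run


def fake_model(prompt: str) -> str:
    """Stand-in model that wraps its input in angle brackets so the call chain is visible."""
    return f"<{prompt}>"


summarize = semantic_function("Summarize: {text}", fake_model)
translate = semantic_function("Translate to French: {summary}", fake_model)
summarize_in_french = compose(summarize, translate, key="summary")
print(summarize_in_french(text="A long article"))
# <Translate to French: <Summarize: A long article>>
```

Since a composed function has the same shape as its parts, it can itself be composed into still higher-level functions.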

Prompt Store therefore includes the ability to execute semantic functions and to enable guardrails such as cost accounting, PII checking, input checking, output parsing, and model monitoring.
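Several of these guardrails can be sketched as steps wrapped around a single model call: input checking before the prompt is built, PII checking before it is sent, cost accounting after the completion arrives, and output parsing at the end. Everything here is a hypothetical illustration rather than Prompt Store’s implementation: the email regex is a deliberately narrow PII check, whitespace-separated word counts stand in for real token counts, the per-token price is an arbitrary placeholder, and model monitoring is omitted.

```python
from __future__ import annotations

import json
import re
from typing import Callable

# Narrow, illustrative PII pattern: email addresses only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


class GuardedFunction:
    """Hypothetical sketch: wraps a prompt-to-text model call with simple guardrails."""

    def __init__(self, template: str, model: Callable[[str], str], cost_per_token: float) -> None:
        self.template = template
        self.model = model
        self.cost_per_token = cost_per_token
        self.total_cost = 0.0

    def __call__(self, **variables: str) -> dict:
        # Input checking: reject blank variables before spending any tokens.
        for name, value in variables.items():
            if not str(value).strip():
                raise ValueError(f"empty input: {name}")
        prompt = self.template.format(**variables)
        # PII checking: redact email addresses before the prompt leaves the application.
        prompt = EMAIL.sub("[REDACTED EMAIL]", prompt)
        completion = self.model(prompt)
        # Cost accounting: word counts as a crude stand-in for a real tokenizer.
        tokens = len(prompt.split()) + len(completion.split())
        self.total_cost += tokens * self.cost_per_token
        # Output parsing: the prompt asks for JSON, so parse it or fail loudly.
        return json.loads(completion)


def fake_model(prompt: str) -> str:
    """Stand-in LLM that reports, as JSON, whether it saw a redacted email."""
    return json.dumps({"redacted": "[REDACTED EMAIL]" in prompt})


extract = GuardedFunction("Reply in JSON. Message: {message}", fake_model, cost_per_token=0.001)
result = extract(message="Contact me at ada@example.com")
print(result)  # {'redacted': True}
```

Keeping each guardrail as a separate step inside the wrapper makes it straightforward to enable or disable them per function.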