Interface with Foundation Models

Foundation models such as GPT-4, Claude, and PaLM expose different capabilities through their APIs. For example, GPT-4 accepts function parameters for tool support, PaLM includes a top_k parameter, and so on.

In addition, there is the standard text completion API, and more recently, the Chat API. Some vendors support both, while others support just the completion API.

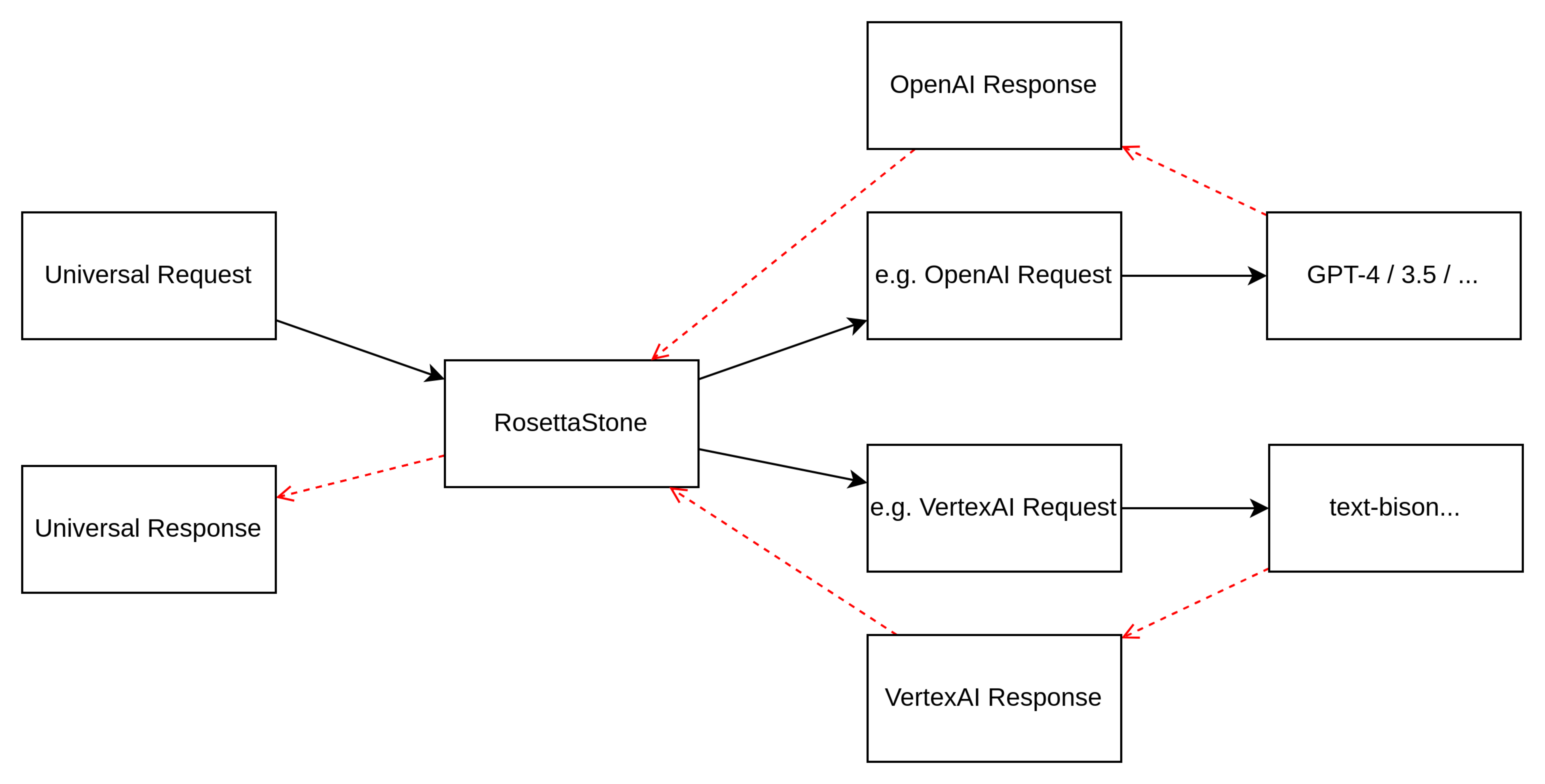

Prompt Store internally uses a universal superset of these features and downgrades requests as needed to interface with each provider and API version. This is in contrast to using the lowest common denominator, or having lots of model-specific code throughout the application.

For example, PaLM distinguishes between context, examples, history, and the current prompt, whereas OpenAI uses messages that have different roles. PaLM context can be translated to an OpenAI system message, and examples can be represented as user-assistant message pairs.
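The mapping described above can be sketched roughly as follows. This is a minimal illustration, not Prompt Store's actual code: the function name `palm_to_openai_messages` and the input shapes (examples as `input`/`output` dicts, history as `(role, text)` pairs) are assumptions made for the example.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def palm_to_openai_messages(
    context: str,
    examples: List[Dict[str, str]],
    history: List[Tuple[str, str]],
    prompt: str,
) -> List[Message]:
    """Translate PaLM-style fields into a list of role-tagged messages.

    Hypothetical helper: the argument shapes are assumptions, not a vendor API.
    """
    messages: List[Message] = []

    # Context becomes a single system message (skipped when empty).
    if context:
        messages.append({"role": "system", "content": context})

    # Each example becomes a user-assistant message pair.
    for example in examples:
        messages.append({"role": "user", "content": example["input"]})
        messages.append({"role": "assistant", "content": example["output"]})

    # History is assumed to already use "user" / "assistant" as role names.
    for role, text in history:
        messages.append({"role": role, "content": text})

    # The current prompt is the final user message.
    messages.append({"role": "user", "content": prompt})
    return messages
```

Calling it with one example and an empty history yields four messages: the system message, the example pair, and the current prompt as the last user message.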

Similarly, instead of having code that switches between completion and chat APIs depending on model capability, Prompt Store uses the chat representation, which has a richer grammar, and translates this to a completion request when required.
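One way to picture the chat-to-completion downgrade is to flatten the messages into a single text prompt. The sketch below assumes a simple `User:` / `Assistant:` transcript layout; the source does not describe the layout Prompt Store actually uses, and the name `chat_to_completion_prompt` is hypothetical.

```python
from typing import Dict, List

Message = Dict[str, str]

# Assumed speaker labels for the flattened transcript.
ROLE_LABELS = {"user": "User", "assistant": "Assistant"}


def chat_to_completion_prompt(messages: List[Message]) -> str:
    """Flatten role-tagged chat messages into one completion prompt string."""
    lines: List[str] = []
    for message in messages:
        if message["role"] == "system":
            # System text is emitted without a speaker label, as a preamble.
            lines.append(message["content"])
        else:
            label = ROLE_LABELS[message["role"]]
            lines.append(f"{label}: {message['content']}")

    # A trailing label cues the model to continue as the assistant.
    lines.append("Assistant:")
    return "\n".join(lines)
```

The richer chat form stays the single internal representation, and the flattening happens only at the boundary where a completion-only model is called.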

A “RosettaStone” module is responsible for request/response translation. Only this module needs to be extended to support new model APIs.
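A translation module of this kind could be organised as a registry keyed by target API, so that supporting a new API means registering one more translator. The following is a sketch under that assumption; `register_translator`, `translate_request`, and the two target names are hypothetical and not taken from the RosettaStone module itself.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
Translator = Callable[[List[Message]], dict]

# Registry mapping a target API name to its request translator.
_TRANSLATORS: Dict[str, Translator] = {}


def register_translator(target: str) -> Callable[[Translator], Translator]:
    """Decorator that registers a translator for the given target API."""
    def decorator(fn: Translator) -> Translator:
        _TRANSLATORS[target] = fn
        return fn
    return decorator


@register_translator("chat")
def to_chat_request(messages: List[Message]) -> dict:
    # Chat targets take the internal representation unchanged.
    return {"messages": list(messages)}


@register_translator("completion")
def to_completion_request(messages: List[Message]) -> dict:
    # Completion targets get the message contents joined into one prompt.
    return {"prompt": "\n".join(m["content"] for m in messages)}


def translate_request(target: str, messages: List[Message]) -> dict:
    """Look up the translator for the target and apply it."""
    try:
        translator = _TRANSLATORS[target]
    except KeyError:
        raise ValueError(f"no translator registered for {target!r}") from None
    return translator(messages)
```

Application code would call only `translate_request`, which keeps model-specific logic out of the rest of the codebase.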