Prompt Management

Prompts are the core of the system.

Create New Prompts

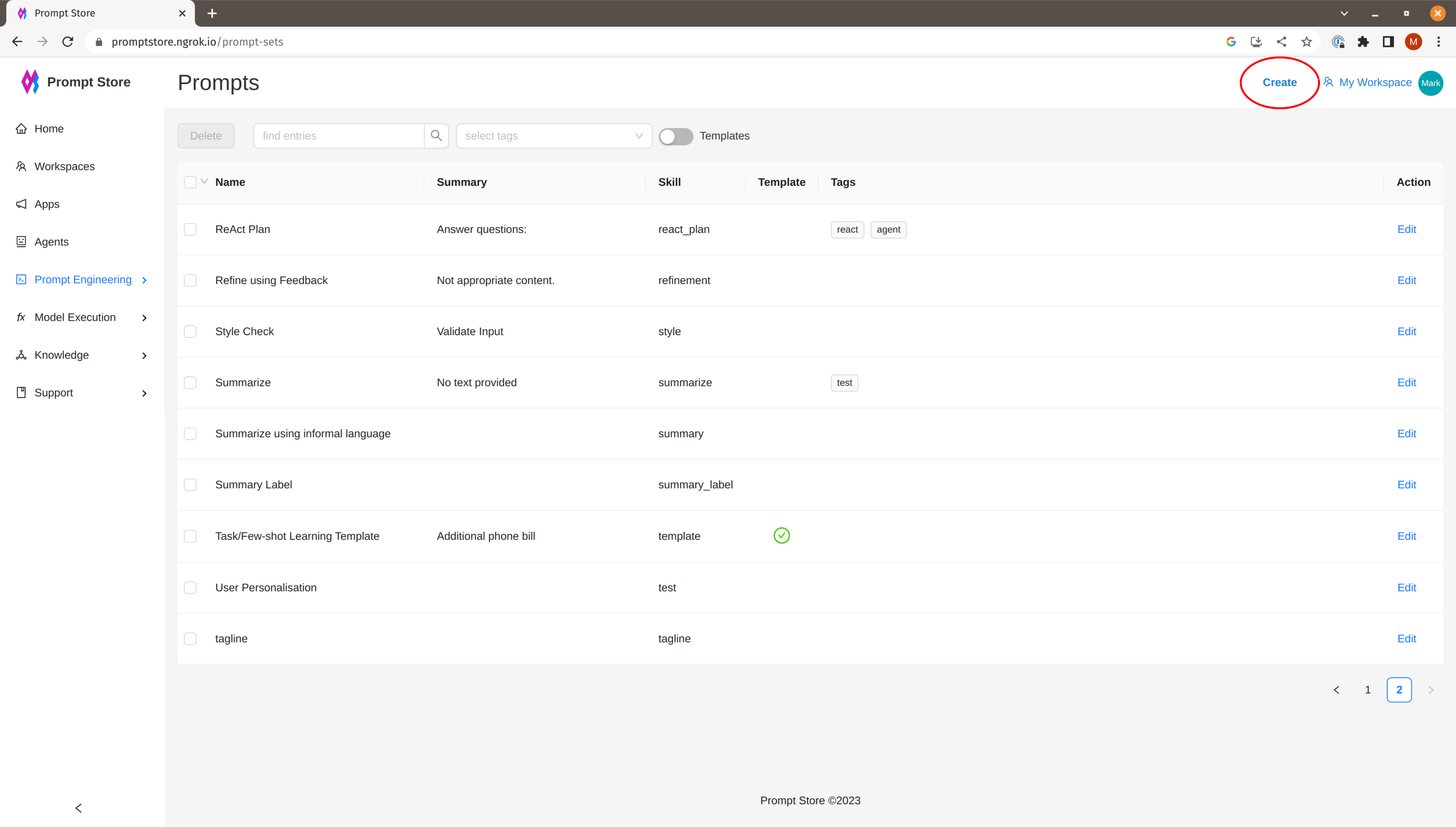

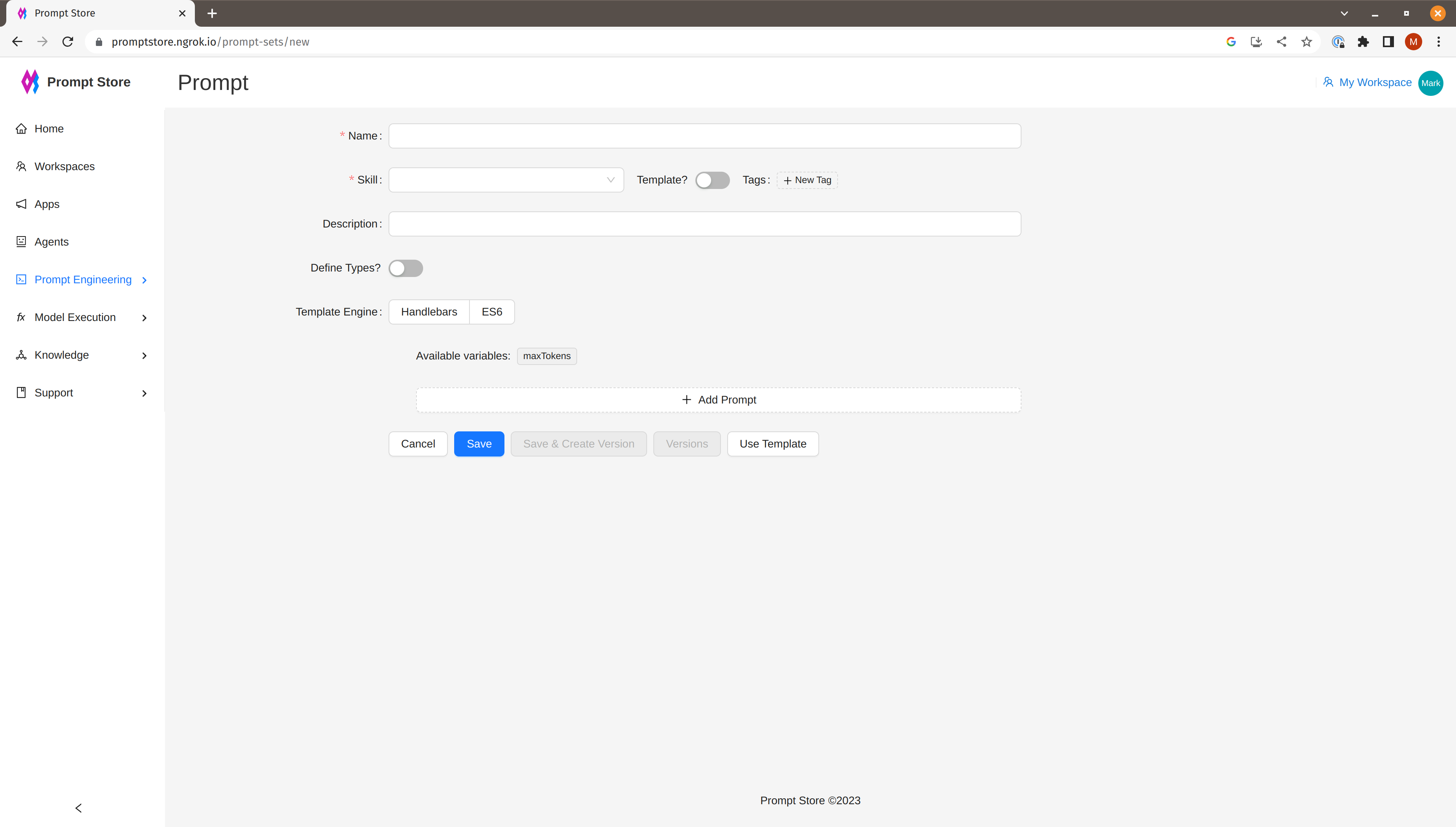

To view the list of prompts or create a new prompt, click “Prompts” under “Prompt Management” in the left-side menu.

Click “Create” in the top navigation bar to create a new prompt.

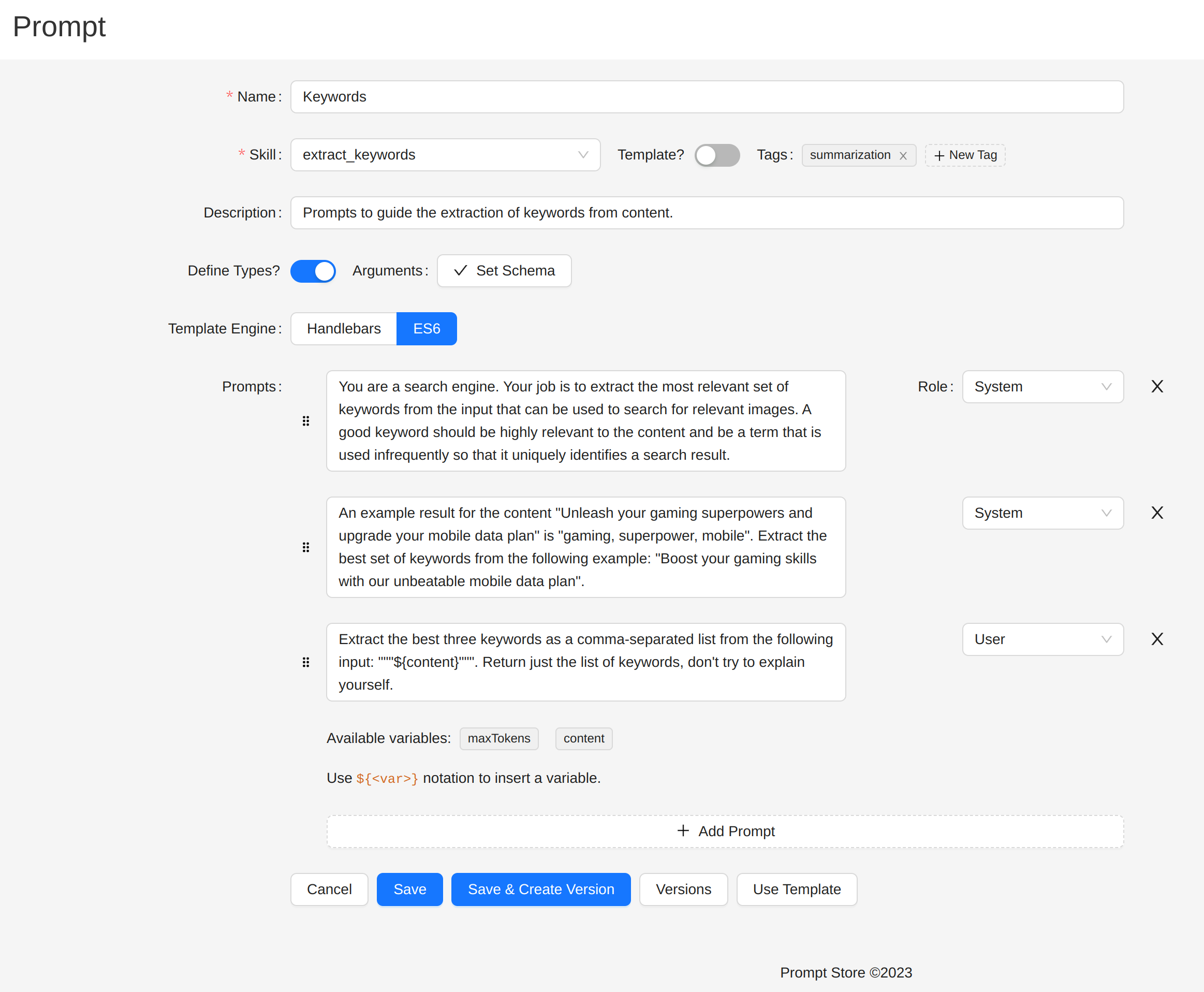

Besides looking up a prompt by its ID, you can attach a skill and one or more tags to it, and then search for it by skill or tag.
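As a rough illustration of lookup by skill or tag, the following Python sketch filters an in-memory list of prompts. The `Prompt` record, its fields, and `find_prompts` are hypothetical; they only mirror the idea that a prompt carries one skill and a set of tags.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Prompt:
    # Hypothetical record; the product's actual prompt fields are not documented here.
    id: str
    name: str
    skill: Optional[str] = None
    tags: Set[str] = field(default_factory=set)


def find_prompts(prompts: List[Prompt], skill: Optional[str] = None,
                 tag: Optional[str] = None) -> List[Prompt]:
    """Return prompts matching the given skill and/or tag; omitted filters are ignored."""
    return [
        p for p in prompts
        if (skill is None or p.skill == skill) and (tag is None or tag in p.tags)
    ]


catalog = [
    Prompt("p1", "Tax FAQ", skill="tax", tags={"faq", "finance"}),
    Prompt("p2", "Refund status", skill="tax", tags={"refund"}),
    Prompt("p3", "Physics tutor", skill="science", tags={"faq"}),
]
print([p.id for p in find_prompts(catalog, skill="tax")])  # ['p1', 'p2']
print([p.id for p in find_prompts(catalog, tag="faq")])    # ['p1', 'p3']
```

Passing both filters narrows the result to prompts that match the skill and carry the tag.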

Validate Inputs

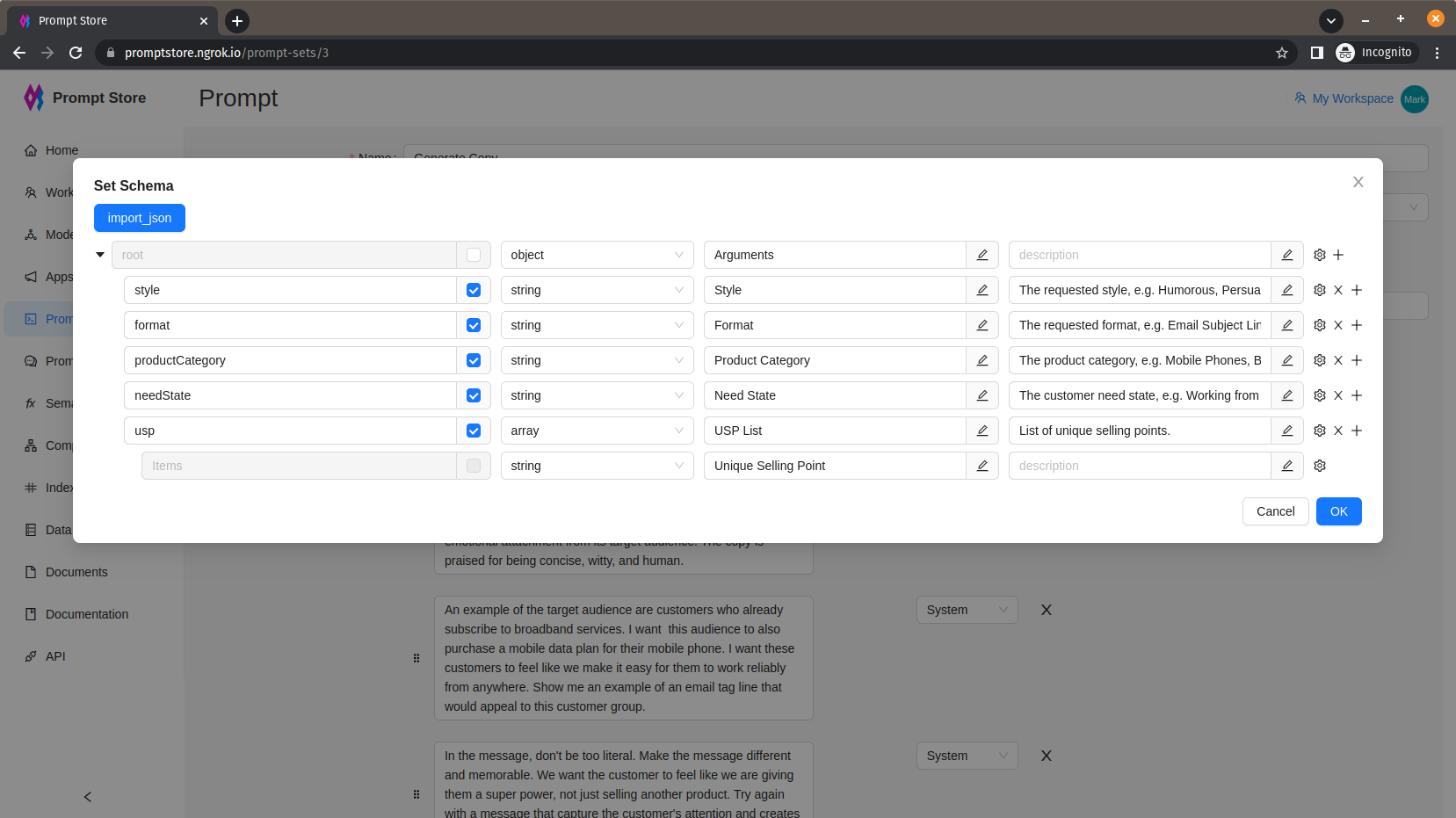

An input schema can be specified using JSON Schema.

When a schema is specified, calls to semantic functions that use the prompt validate their inputs against it. This helps ensure that prompts produce the expected results and are not degraded by missing inputs.
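The kind of check described above can be sketched in plain Python. This is not the product's validator: `check_inputs` is a hypothetical helper that covers only the `required` keyword and simple string-valued `type` checks from JSON Schema. A real implementation would more likely delegate to a complete validator such as the `jsonschema` package.

```python
from typing import Any, Dict, List

# JSON Schema primitive types covered by this sketch, mapped to Python types.
_TYPES = {
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
    "object": dict,
    "array": list,
}


def check_inputs(inputs: Dict[str, Any], schema: Dict[str, Any]) -> List[str]:
    """Check `required` and per-property `type`; return a list of error messages."""
    errors = []
    for name in schema.get("required", []):
        if name not in inputs:
            errors.append(f"missing required input: {name}")
    for name, spec in schema.get("properties", {}).items():
        type_name = spec.get("type")
        expected = _TYPES.get(type_name) if isinstance(type_name, str) else None
        if name not in inputs or expected is None:
            continue  # absent input or unsupported type keyword: nothing to check
        value = inputs[name]
        # bool is a subclass of int in Python, so it must not count as a number.
        is_bad_bool = isinstance(value, bool) and type_name in ("number", "integer")
        if is_bad_bool or not isinstance(value, expected):
            errors.append(f"{name}: expected {type_name}")
    return errors


schema = {
    "type": "object",
    "properties": {
        "question": {"type": "string"},
        "year": {"type": "integer"},
    },
    "required": ["question"],
}
print(check_inputs({"question": "When do I file?", "year": 2023}, schema))  # []
print(check_inputs({"year": "2023"}, schema))
# ['missing required input: question', 'year: expected integer']
```

Returning a list of messages, rather than stopping at the first problem, lets a caller report every missing or mistyped input at once.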

Prompt Format

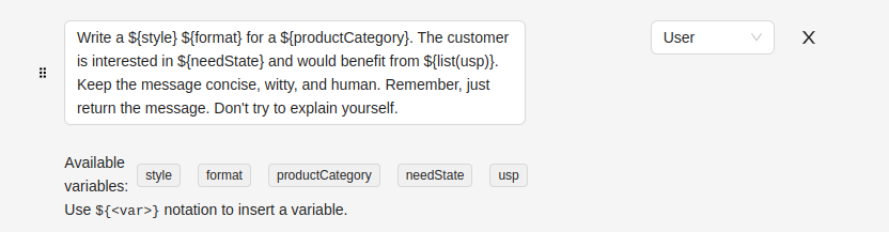

Multi-message prompt formats are supported. The chat completion API accepts a list of messages, each assigned one of three roles: system, assistant, or user.
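To make the message layout concrete, here is a small Python sketch that checks a message list against the conventions described on this page: roles limited to system, user, and assistant, an optional system message at the start, and a final user message. `validate_messages` is a hypothetical helper; the chat completion API itself may apply different rules.

```python
from typing import Dict, List

VALID_ROLES = {"system", "user", "assistant"}


def validate_messages(messages: List[Dict[str, str]]) -> None:
    """Raise ValueError if `messages` breaks the layout described on this page."""
    if not messages:
        raise ValueError("at least one message is required")
    for i, msg in enumerate(messages):
        role = msg.get("role")
        if role not in VALID_ROLES:
            raise ValueError(f"message {i}: unknown role {role!r}")
        # The optional system message belongs at the beginning of the array.
        if role == "system" and i != 0:
            raise ValueError(f"message {i}: system message must come first")
        if not isinstance(msg.get("content"), str):
            raise ValueError(f"message {i}: content must be a string")
    # Ending on a user message signals that it is the assistant's turn to respond.
    if messages[-1]["role"] != "user":
        raise ValueError("the last message must come from the user")


validate_messages([
    {"role": "system", "content": "Assistant is a helpful physics tutor."},
    {"role": "user", "content": "What is thermodynamics?"},
])  # passes: no exception is raised
```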

System Role

The system role, also known as the system message, is included at the beginning of the array. This message provides the initial instructions to the model. You can include various kinds of information in the system message, including:

- A brief description of the assistant

- Personality traits of the assistant

- Instructions or rules you would like the assistant to follow

- Data or information needed for the model, such as relevant questions from an FAQ

You can customize the system message for your use case or include only basic instructions. The system message is optional, but including at least a basic one is recommended for the best results.
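One way to assemble a system message from the kinds of information listed above is sketched below. The function name, its parameters, and the section labels ("Personality:", "Rules:", "Reference information:") are illustrative assumptions, not a format the system requires.

```python
from typing import Dict, List, Optional


def compose_system_message(
    description: str,
    traits: Optional[List[str]] = None,
    rules: Optional[List[str]] = None,
    reference_data: Optional[List[str]] = None,
) -> Dict[str, str]:
    """Combine the optional parts of a system message into one message dict."""
    sections = [description.strip()]
    if traits:
        sections.append("Personality: " + ", ".join(traits) + ".")
    if rules:
        sections.append("Rules:\n" + "\n".join(f"- {rule}" for rule in rules))
    if reference_data:
        sections.append("Reference information:\n" + "\n".join(reference_data))
    # Blank lines between sections keep each kind of information visually separate.
    return {"role": "system", "content": "\n\n".join(sections)}


message = compose_system_message(
    "Assistant is an intelligent chatbot designed to help users answer their tax related questions.",
    traits=["concise", "friendly"],
    rules=["Link to the relevant irs.gov page when possible."],
)
print(message["content"])
```

Only the description is mandatory here, which matches the advice to include at least a basic system message.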

Assistant and User Roles

After the system role, you can include a series of messages between the user and the assistant.

```json
{"role": "user", "content": "What is thermodynamics?"}
```

To trigger a response from the model, end with a user message; this signals that it is the assistant’s turn to respond.

Intermediate user-assistant message pairs can also be included as examples for few-shot learning:

```json
[
  {"role": "system", "content": "Assistant is an intelligent chatbot designed to help users answer their tax related questions."},
  {"role": "user", "content": "When do I need to file my taxes by?"},
  {"role": "assistant", "content": "In 2023, you will need to file your taxes by April 18th. The date falls after the usual April 15th deadline because April 15th falls on a Saturday in 2023. For more details, see https://www.irs.gov/filing/individuals/when-to-file."},
  {"role": "user", "content": "How can I check the status of my tax refund?"},
  {"role": "assistant", "content": "You can check the status of your tax refund by visiting https://www.irs.gov/refunds"}
]
```

Inserting variables

Variables can be injected into prompts. The values may be sourced from:

- Arguments to the semantic function that uses the prompt

- Context from an Index (a semantic search index backed by a Vector Store)

- An Online Feature Store

The list of available variables is taken from the Arguments Schema.
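The following Python sketch shows one way variable injection could work. It assumes a hypothetical `{{name}}` placeholder syntax, models the three value sources as plain dictionaries, and lets arguments override index context, which in turn overrides feature-store values; the product's actual syntax and precedence are not documented here. Only names declared in the schema's `properties` are accepted, mirroring the rule that the available variables come from the Arguments Schema.

```python
import re
from typing import Any, Dict

# Hypothetical placeholder syntax {{name}}; the product's real syntax may differ.
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(
    template: str,
    schema: Dict[str, Any],
    arguments: Dict[str, Any],
    index_context: Dict[str, Any],
    features: Dict[str, Any],
) -> str:
    """Replace each {{name}} with a value; only schema-declared names are allowed."""
    allowed = set(schema.get("properties", {}))
    # Assumed precedence: arguments > index context > feature store.
    values = {**features, **index_context, **arguments}

    def substitute(match):
        name = match.group(1)
        if name not in allowed:
            raise KeyError(f"{name!r} is not declared in the arguments schema")
        if name not in values:
            raise KeyError(f"no value supplied for {name!r}")
        return str(values[name])

    return _PLACEHOLDER.sub(substitute, template)


schema = {"properties": {"question": {"type": "string"},
                         "context": {"type": "string"},
                         "tier": {"type": "string"}}}
text = render_prompt(
    "Customer tier: {{tier}}\nContext: {{context}}\nQuestion: {{ question }}",
    schema,
    arguments={"question": "How can I check my refund?"},
    index_context={"context": "Refund status: https://www.irs.gov/refunds"},
    features={"tier": "gold"},
)
print(text)
```

Raising on undeclared or unfilled placeholders surfaces template mistakes early instead of sending a prompt with literal `{{...}}` text to the model.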

Creating versions

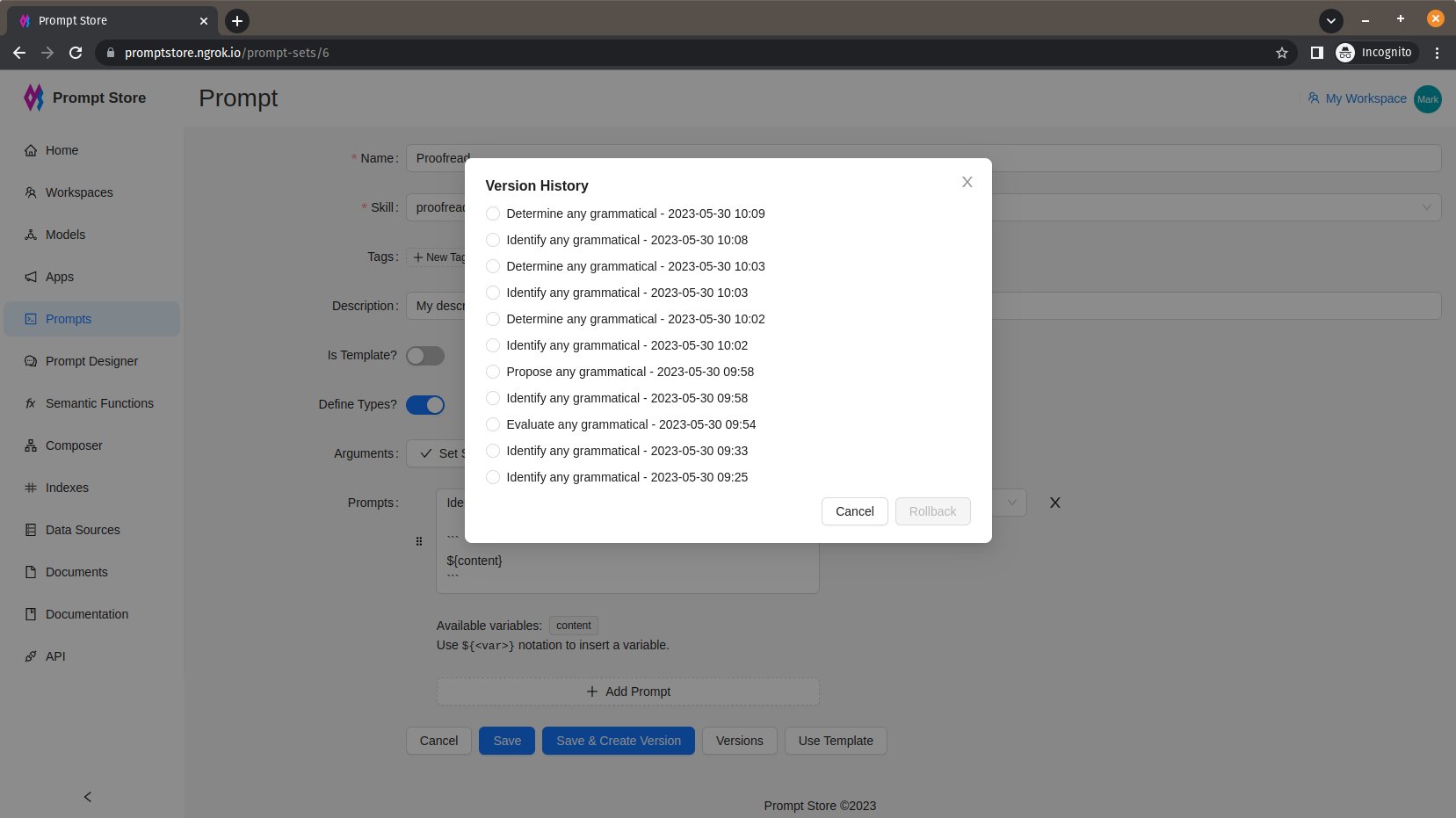

To save the prompt as a new version, click “Save & Create Version”.

To see previous versions, click “Versions”.

To roll back to an earlier version, select it in the Versions window and click “Rollback”. The current version is automatically saved as a new version.
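The rollback behavior above can be modeled with a small in-memory history. `PromptHistory` and its methods are hypothetical; the sketch only illustrates why a rollback does not lose work: the text being replaced is stored as a new version when the earlier one is restored.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptHistory:
    """Hypothetical in-memory model of a prompt's saved versions."""
    current: str
    versions: List[str] = field(default_factory=list)

    def save_version(self) -> int:
        """Snapshot the current text (like "Save & Create Version"); return its number."""
        self.versions.append(self.current)
        return len(self.versions)  # version numbers start at 1

    def rollback(self, number: int) -> None:
        """Restore version `number` after saving the current text as a new version."""
        if not 1 <= number <= len(self.versions):
            raise IndexError(f"no version {number}")
        target = self.versions[number - 1]
        self.save_version()
        self.current = target


history = PromptHistory("v1 text")
history.save_version()        # version 1
history.current = "v2 text"
history.save_version()        # version 2
history.current = "draft text"
history.rollback(1)
print(history.current)   # v1 text
print(history.versions)  # ['v1 text', 'v2 text', 'draft text']
```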

Templates

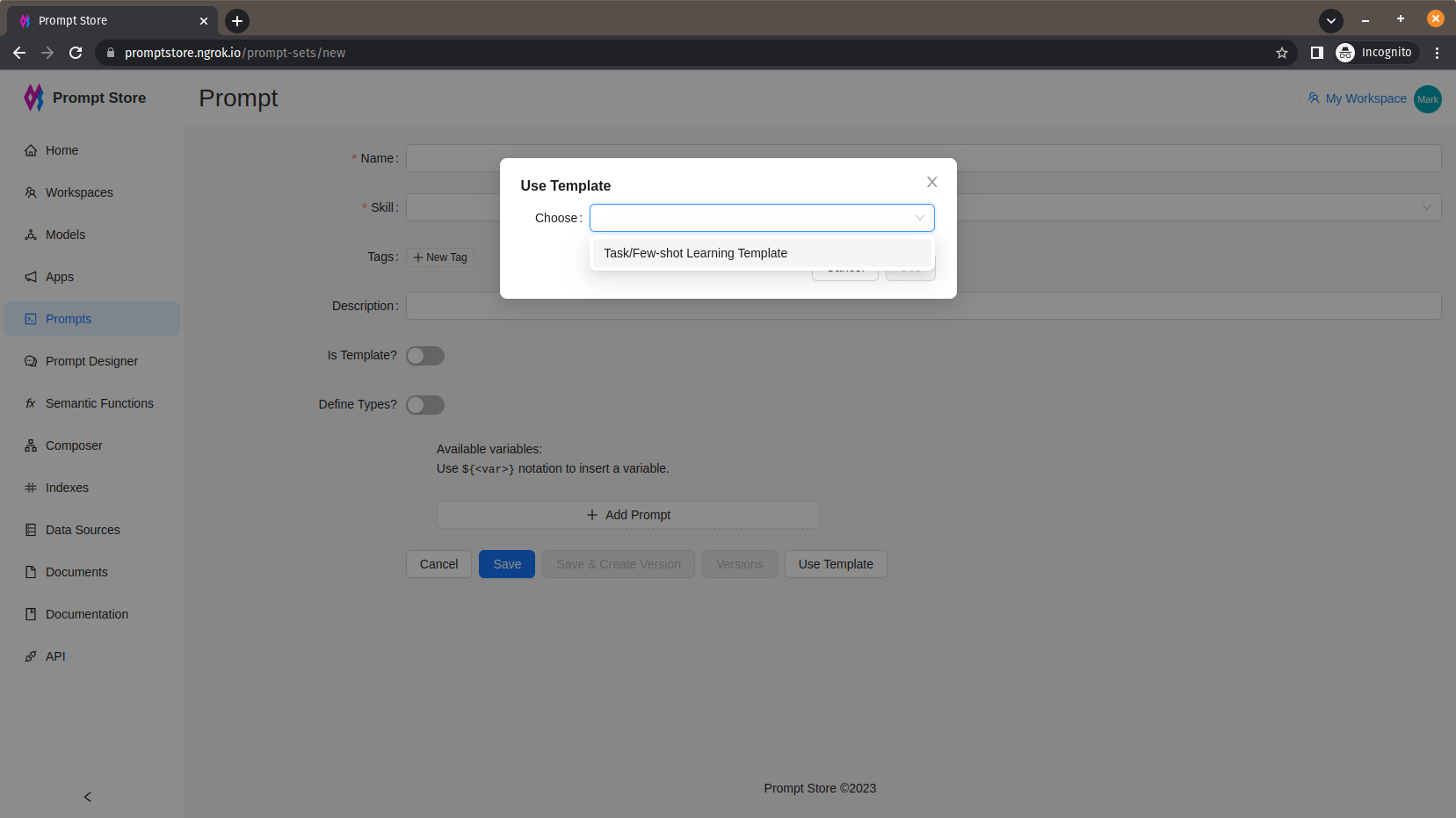

A prompt can be saved as a template by turning on the “Is Template” switch. Templates are used to share best practices and to kick-start new prompts.

To start a new prompt from a template, open the new prompt form and click “Use Template”.